
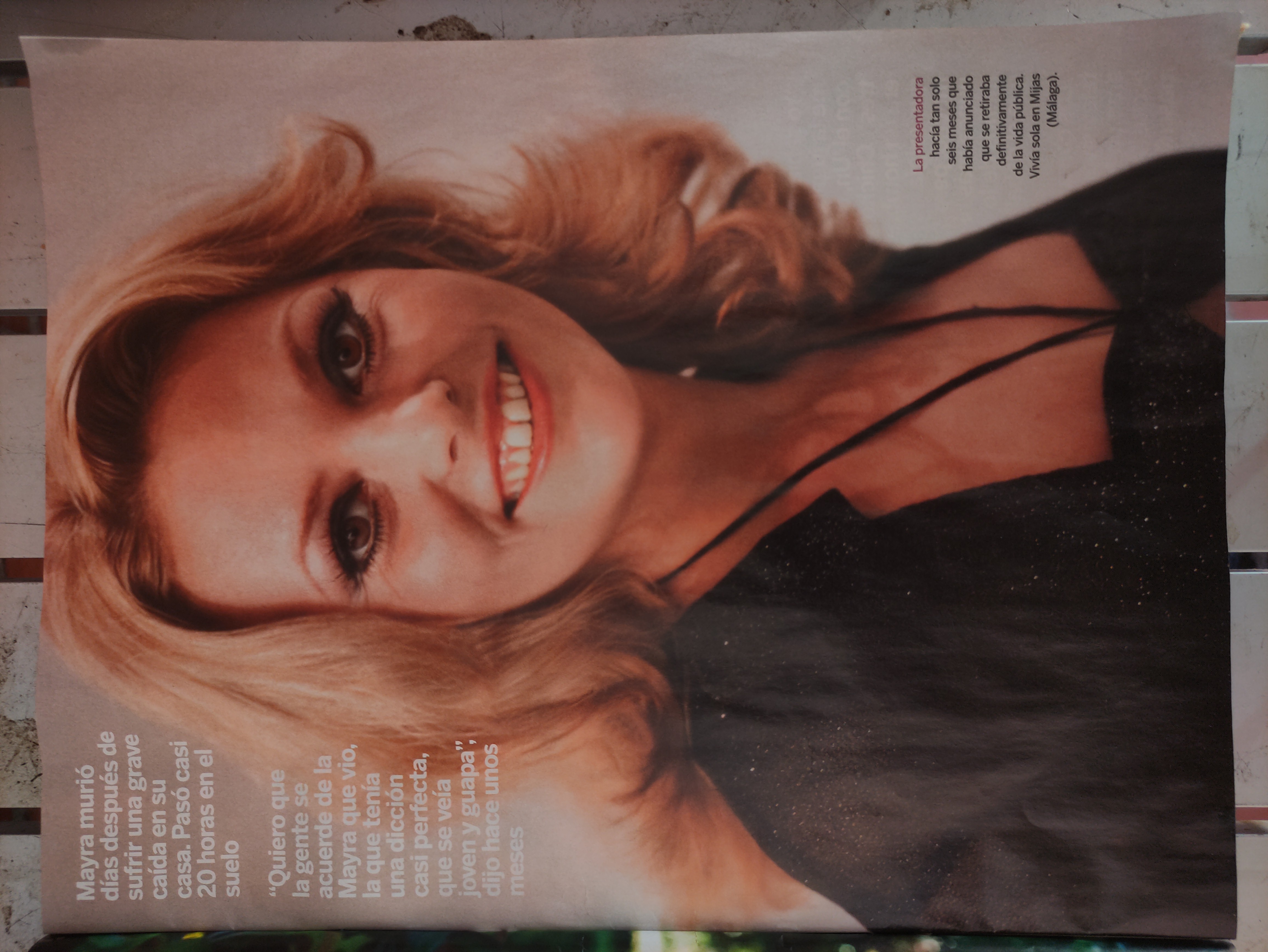

In Sweden, one in four people die alone, and in Spain, where the population pyramid has completely inverted (it now looks more like a spinning top), it’s only a matter of time before the same thing happens: neighbors calling the police because of a bad smell, only to find a body that needs to be removed. A striking case is that of Mayra Gómez Kemp (the host of “Un, dos, tres”), who spent 20 hours lying on the floor until someone finally noticed and called for help. The first 10 hours must have been hard, but the last 10, thinking you’re going to die lying there, must have been even worse. We’re going to explore the possibility of building an agent that roams around the house and raises an alert if it sees someone lying on the floor, triggering an actuator that presses the Red Cross emergency button.


Beyond the Uncanny Valley

Would you leave a humanoid robot switched on in the kitchen surrounded by knives and go to sleep peacefully? Not everyone would.

But there’s one platform we’re already comfortable with, one that doesn’t scare us: the Roomba.

For elderly people, a platform like the Enabot, capable of withstanding kicks and cane hits, would be more suitable, but that’s for later.

Proof of Concept

We’ll use a recycled Roomba 620 with a video camera mounted on top.

The Roomba is connected to an ESP32 Wroom with a magnetometer (AKA compass) and an accelerometer. The technical hardware details and schematics will be explained in another post.

If we want this to be a household appliance suitable for any home, we cannot depend on subscriptions, which may be subject to arbitrary regulations created by arbitrary politicians. So the model has to run on-prem.

I’ve used Ollama as the LLM server. After searching through its model catalog, I found none that offers both vision and tools and still fits in 16GB of VRAM. After spending quite some time with gemma3, which has vision, I realized it can return responses in JSON format. With the right prompt, we can parse the chat output and use it to control the robot.

Model Server

Hardware

For this use case, a CPU won’t do, since we’re working in a real-time environment and inference on CPU with image processing is absurdly slow.

We need a GPU capable of handling the model. I bought an NVIDIA RTX 4060 Ti with 16GB of VRAM, which is enough to run gemma3:12b, along with a motherboard, processor, memory, and power supply all chosen to support the GPU properly.

The case is from a contest I won back in university, which I’m fond of, and I’ve adapted it for modern motherboards. :-D


Software

I’ve already explained in another post how to set up the Ollama server on Ubuntu, so here’s a quick copy-paste recap.

Kubernetes:

    sudo snap install kubectl
    sudo snap install helm
    sudo snap install microk8s
    mkdir -p ~/.kube
    microk8s.config > ~/.kube/config
    sudo microk8s.enable dns
    sudo microk8s.enable ingress
    sudo microk8s.enable hostpath-storage
    sudo microk8s.enable nvidia

Ollama client:

    curl -fsSL https://ollama.com/install.sh | sh
    sudo systemctl stop ollama
    sudo systemctl disable ollama
    export OLLAMA_HOST=http://ollama.127-0-0-1.nip.io

Ollama server:

    echo """
    ollama:
      gpu:
        enabled: true
        type: nvidia
        number: 1
    ingress:
      enabled: true
      hosts:
        - host: ollama.127-0-0-1.nip.io
          paths:
            - path: /
              pathType: Prefix
    persistentVolume:
      enabled: true
    """ > values.yaml

    helm upgrade --install \
      ollama \
      --repo https://otwld.github.io/ollama-helm/ \
      --namespace ollama \
      --create-namespace \
      --wait \
      --values values.yaml \
      ollama

    rm values.yaml

Once installed, we download the model:

    export OLLAMA_HOST=http://ollama.127-0-0-1.nip.io
    ollama pull gemma3:12b

Software

All code is in this repository.

Input Data

We have a directory with camera images taken once per second and an events file sent by the ESP32 Wroom and received by a simple Flask program that stores them in a shared volume.
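To make the two inputs concrete, here is a minimal sketch of how they could be read. The function names match the ones called in the main loop further down, but the bodies are my assumptions, not the repository code: I assume the events file holds one JSON object per line with an ISO 8601 `datetime` field, and that images are named after their capture time (for example `20250101T120003.jpg`, a hypothetical naming scheme).

```python
import json
from datetime import datetime
from pathlib import Path


def get_latest_event(events_file):
    """Return the last non-empty line of a JSON Lines file as a dict."""
    lines = [ln for ln in Path(events_file).read_text().splitlines() if ln.strip()]
    if not lines:
        raise ValueError(f"no events in {events_file}")
    return json.loads(lines[-1])


def find_closest_image_path(image_dir, target_time):
    """Return the image whose filename timestamp is nearest to target_time.

    Assumes target_time is an ISO 8601 string and that images are named
    like 20250101T120003.jpg (hypothetical naming scheme).
    """
    target = datetime.fromisoformat(target_time)
    candidates = []
    for path in Path(image_dir).glob("*.jpg"):
        try:
            taken = datetime.strptime(path.stem, "%Y%m%dT%H%M%S")
        except ValueError:
            continue  # skip files that do not follow the naming scheme
        candidates.append((abs((taken - target).total_seconds()), path))
    if not candidates:
        raise FileNotFoundError(f"no timestamped images in {image_dir}")
    # Pick the candidate with the smallest time difference
    return str(min(candidates, key=lambda c: c[0])[1])
```

With one image per second, the closest image is at most about half a second away from the sensor reading, which is probably good enough for a robot that moves as slowly as a Roomba.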

Interaction with the LLM

The prompt instructs the LLM with input data, possible actions, and the expected response format:

    PROMPT = """
    You are the brain of an autonomous robot.
    Your task is to decide the next action based on:
    1. **Camera image** (provided directly as part of the context).
    2. **Sensors data**: distances, obstacles, battery, temperature, tilt, etc.
    3. **Current goal**: what you are trying to achieve right now.

    ### Possible actions
    - `forward` (distance in cm)
    - `backward` (distance in cm)
    - `turn_left` (angle in degrees)
    - `turn_right` (angle in degrees)
    - `dock` (return to charging base)
    - `stop` (stop movement)
    - `start` (start movement)

    ### Response format
    Respond **only** in valid JSON (no extra text) with the following structure:
    {{
      "steps": [
        {{ "": }}
      ],
      "goal": "",
      "thoughts": "",
      "description": ""
    }}
    """

The main loop is very simple: grab sensor data, find the closest image, query the LLM, and execute the instructions it provides:

    while True:
        sensors = get_latest_event(events_file=EVENTS_FILE)
        image_path = find_closest_image_path(image_dir=IMAGES_DIRECTORY, target_time=sensors["datetime"])
        response = query_llm(sensors, image_path, current_goal)
        instructions = {}
        instructions["steps"] = response["steps"]
        execute_instructions(instructions)
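Since the steps come straight from a language model, it may be worth filtering them before they reach the motors. The following is a sketch of that idea, not the repository code: `sanitize_steps` and `ACTION_LIMITS` are hypothetical names, the numeric limits are made up for illustration, and I assume each step is a one-key object such as `{"forward": 20}`.

```python
# Actions listed in the prompt; the numeric limits are illustrative assumptions.
ACTION_LIMITS = {
    "forward": (0, 100),     # cm
    "backward": (0, 100),    # cm
    "turn_left": (0, 180),   # degrees
    "turn_right": (0, 180),  # degrees
    "dock": None,            # takes no argument
    "stop": None,
    "start": None,
}


def sanitize_steps(steps):
    """Keep only known actions and clamp their numeric arguments."""
    safe = []
    for step in steps:
        if not isinstance(step, dict) or len(step) != 1:
            continue  # assumed shape: exactly one {"action": value} pair
        (action, value), = step.items()
        if action not in ACTION_LIMITS:
            continue  # unknown action, drop it
        limits = ACTION_LIMITS[action]
        if limits is None:
            safe.append({action: None})
            continue
        if isinstance(value, bool) or not isinstance(value, (int, float)):
            continue  # argument is not a number, drop the step
        low, high = limits
        safe.append({action: max(low, min(high, value))})
    return safe
```

In the loop above this would be applied as `instructions["steps"] = sanitize_steps(response["steps"])`, so that a hallucinated action or an oversized distance never gets executed.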

The trickiest part is in the query_llm function: since the model doesn’t have tools, we parse the chat output to extract the JSON:

    import json

    import ollama

    def query_llm(sensors_data, image_path, current_goal, PROMPT=PROMPT):
        # Fill the prompt template with the latest sensor readings and goal
        composed_prompt = PROMPT.format(sensors_data=sensors_data, current_goal=current_goal)
        # Send the prompt and the camera image in a single user message
        response = ollama.chat(
            model="gemma3:12b",
            messages=[
                {
                    "role": "user",
                    "content": composed_prompt,
                    "images": [image_path],
                }
            ],
        )
        # Extract only the JSON block from the answer: keep the lines
        # found between the opening and closing code fences
        raw = response.message.content.splitlines()
        inside_block = False
        filtered_lines = []
        for line in raw:
            if line.strip().startswith("```"):
                inside_block = not inside_block
                continue
            if inside_block:
                filtered_lines.append(line)
        json_str = "\n".join(filtered_lines).strip()
        parsed = json.loads(json_str)
        return parsed
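The extraction above expects the reply to wrap its JSON in a fenced block. Should a reply ever arrive without one, a more tolerant parser could fall back to the raw text. This is a sketch under that assumption; `extract_json` is a hypothetical helper and is not part of the repository.

```python
import json

FENCE = "`" * 3  # the three-backtick marker that opens and closes a block


def extract_json(text):
    """Parse a JSON object from a chat reply, with or without a code fence."""
    inside_block = False
    fenced = []
    for line in text.splitlines():
        if line.strip().startswith(FENCE):
            inside_block = not inside_block
            continue
        if inside_block:
            fenced.append(line)
    # Prefer the fenced content; otherwise try the whole reply
    candidate = "\n".join(fenced).strip() or text.strip()
    try:
        return json.loads(candidate)
    except json.JSONDecodeError:
        # Last resort: parse whatever sits between the outermost braces
        start, end = candidate.find("{"), candidate.rfind("}")
        if start == -1 or end <= start:
            raise
        return json.loads(candidate[start:end + 1])
```

If nothing parseable is found, the function still raises `json.JSONDecodeError`, so the main loop can decide whether to retry the query or stop the robot.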

And the result is:

Surprisingly, the model has NEVER failed to return properly formatted JSON.

Lessons Learned for the Next Iteration

Spatial vision is crucial at short distances, or more sensors are needed. When approaching a wall, the LLM interprets the photo as blurry and uninformative.

To rely on images alone, two cameras are necessary, and the model must be trained with a composite image so it learns depth perception.

The camera needs about 180-degree coverage to fully understand its environment, even if the images get distorted.

Sensor data is sent too slowly. In this prototype, it could be improved by fetching all sensor data simultaneously.

Who Wants to Join Me on This Journey?

This project could help address a very real problem affecting millions of people — one that nobody seems to care about.

And I don’t mean so much for myself, since I have three kids and hope at least one of them will look after me. ;-)